记录三维点云配准的经典Iterative Closest Point(ICP)算法。

之前记录了一个简单的基于marker的配准算法,但是这个算法非常初级,而且要求坐标是成组排序的。在实际应用中就会比较麻烦,一般只是用于手工选取极少数marker点来进行配准。而ICP算法依赖于点之间的几何关系,而非点的索引顺序,因此在较多的点的配准上更加方便。因为我们可以通过简单的强度过滤就筛选出绝大部分marker,然后再利用ICP算法进行配准,既方便又准确。

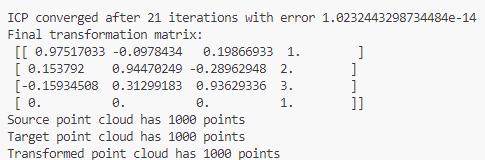

效果如上图所示,红色为source_points,绿色为 target_points,通过ICP算法可以得到从 source 到 target 的变换矩阵,通过应用该矩阵对 source再次进行变换,可以看到能够与 target_points重合。

可以看到对于包含1000个点的点云配准,ICP21次迭代后就完成了,基本就是几秒钟的时间。这对于一般成像的基于marker的通道配准足够了。

代码如下:

import numpy as np

import open3d as o3d

import copy

# 生成示例点云并打乱顺序

def generate_sample_point_clouds(n_points=1000):

# 生成随机源点云

source_points = np.random.rand(n_points, 3) * 10

# 打乱源点云顺序

np.random.shuffle(source_points)

source = o3d.geometry.PointCloud()

source.points = o3d.utility.Vector3dVector(source_points)

# 生成目标点云(通过随机变换)

rotation = o3d.geometry.get_rotation_matrix_from_xyz([0.3, 0.2, 0.1])

translation = np.array([1.0, 2.0, 3.0])

target_points = (source_points @ rotation.T) + translation

# 打乱目标点云顺序

np.random.shuffle(target_points)

target = o3d.geometry.PointCloud()

target.points = o3d.utility.Vector3dVector(target_points)

return source, target

# ICP算法实现

def icp(source, target, max_iterations=50, threshold=0.01):

"""

实现ICP算法

参数:

source: 源点云 (Open3D PointCloud)

target: 目标点云 (Open3D PointCloud)

max_iterations: 最大迭代次数

threshold: 收敛阈值

返回:

transformation: 最终变换矩阵 (4x4)

"""

source_points = np.asarray(source.points)

target_points = np.asarray(target.points)

# 初始化变换矩阵

transformation = np.eye(4)

for i in range(max_iterations):

# 构建KD树以查找最近点

target_tree = o3d.geometry.KDTreeFlann(target)

correspondences = []

# 寻找最近点对

for j, point in enumerate(source_points):

[_, idx, _] = target_tree.search_knn_vector_3d(point, 1)

correspondences.append([j, idx[0]])

# 提取对应点

src_corr = source_points[[c[0] for c in correspondences]]

tgt_corr = target_points[[c[1] for c in correspondences]]

# 计算质心

src_centroid = np.mean(src_corr, axis=0)

tgt_centroid = np.mean(tgt_corr, axis=0)

# 去中心化

src_centered = src_corr - src_centroid

tgt_centered = tgt_corr - tgt_centroid

# 计算协方差矩阵

H = src_centered.T @ tgt_centered

# 使用SVD分解计算旋转矩阵

U, _, Vt = np.linalg.svd(H)

rotation = Vt.T @ U.T

# 计算平移向量

translation = tgt_centroid - rotation @ src_centroid

# 更新变换矩阵

delta_transform = np.eye(4)

delta_transform[:3, :3] = rotation

delta_transform[:3, 3] = translation

# 应用变换

transformation = delta_transform @ transformation

source_points = (rotation @ source_points.T).T + translation

# 检查收敛

error = np.mean(np.linalg.norm(src_corr - tgt_corr, axis=1))

if error < threshold:

print(f"ICP converged after {i+1} iterations with error {error}")

break

# 更新源点云

source.points = o3d.utility.Vector3dVector(source_points)

return transformation

# 应用变换矩阵进行点云配准

def apply_transformation(source, transformation):

"""

应用变换矩阵到源点云

参数:

source: 源点云 (Open3D PointCloud)

transformation: 变换矩阵 (4x4)

返回:

transformed: 配准后的点云

"""

transformed = copy.deepcopy(source)

transformed.transform(transformation)

return transformed

# 可视化点云

def visualize_point_clouds(source, target, transformed, offset=[0.0, 0.0, 0.0]):

"""

可视化点云,添加偏移以区分配准后的点云

参数:

source: 原始源点云 (红色)

target: 目标点云 (绿色)

transformed: 配准后的点云 (蓝色)

offset: 配准点云的偏移量,仅用于可视化

"""

source = copy.deepcopy(source)

target = copy.deepcopy(target)

transformed = copy.deepcopy(transformed)

# 打印点云点数以调试

print(f"Source point cloud has {len(source.points)} points")

print(f"Target point cloud has {len(target.points)} points")

print(f"Transformed point cloud has {len(transformed.points)} points")

# 应用偏移(仅用于可视化)

if offset != [0.0, 0.0, 0.0]:

transformed_points = np.asarray(transformed.points) + np.array(offset)

transformed.points = o3d.utility.Vector3dVector(transformed_points)

source.paint_uniform_color([1, 0, 0]) # 红色

target.paint_uniform_color([0, 1, 0]) # 绿色

transformed.paint_uniform_color([0, 0, 1]) # 蓝色

# 显示所有点云

o3d.visualization.draw_geometries([source, target, transformed],

window_name="ICP Registration with Shuffled Points",

width=800, height=600)

# 单独显示配准后的点云和目标点云,便于检查

o3d.visualization.draw_geometries([target, transformed],

window_name="Target and Transformed Point Clouds",

width=800, height=600)

def main():

# 生成点云并打乱顺序

source, target = generate_sample_point_clouds()

# 复制源点云用于变换

source_copy = copy.deepcopy(source)

# 运行ICP算法

transformation = icp(source_copy, target)

print("Final transformation matrix:\n", transformation)

# 应用变换进行配准

transformed_source = apply_transformation(source, transformation)

# 可视化,添加小偏移以区分蓝色点云

visualize_point_clouds(source, target, transformed_source, offset=[0.1, 0.1, 0.1])

if __name__ == "__main__":

main()

此处评论已关闭